马帮数据集成到MySQL案例解析

在本次技术分享中,我们将深入探讨如何通过轻易云数据集成平台,将马帮的MSKU列表数据高效、可靠地集成到MySQL数据库。从API接口调用的实现,到数据格式转换及异常处理机制,一一详述,以期为有类似需求的企业提供参考。

本方案命名为“马帮MSKU列表(listing)=>MYSQL”,强调了从马帮系统获取的数据将被批量写入至MySQL,确保每条记录都不丢失。整个过程依赖于几个关键特性:定时抓取、分页处理、高吞吐量写入和智能监控系统。

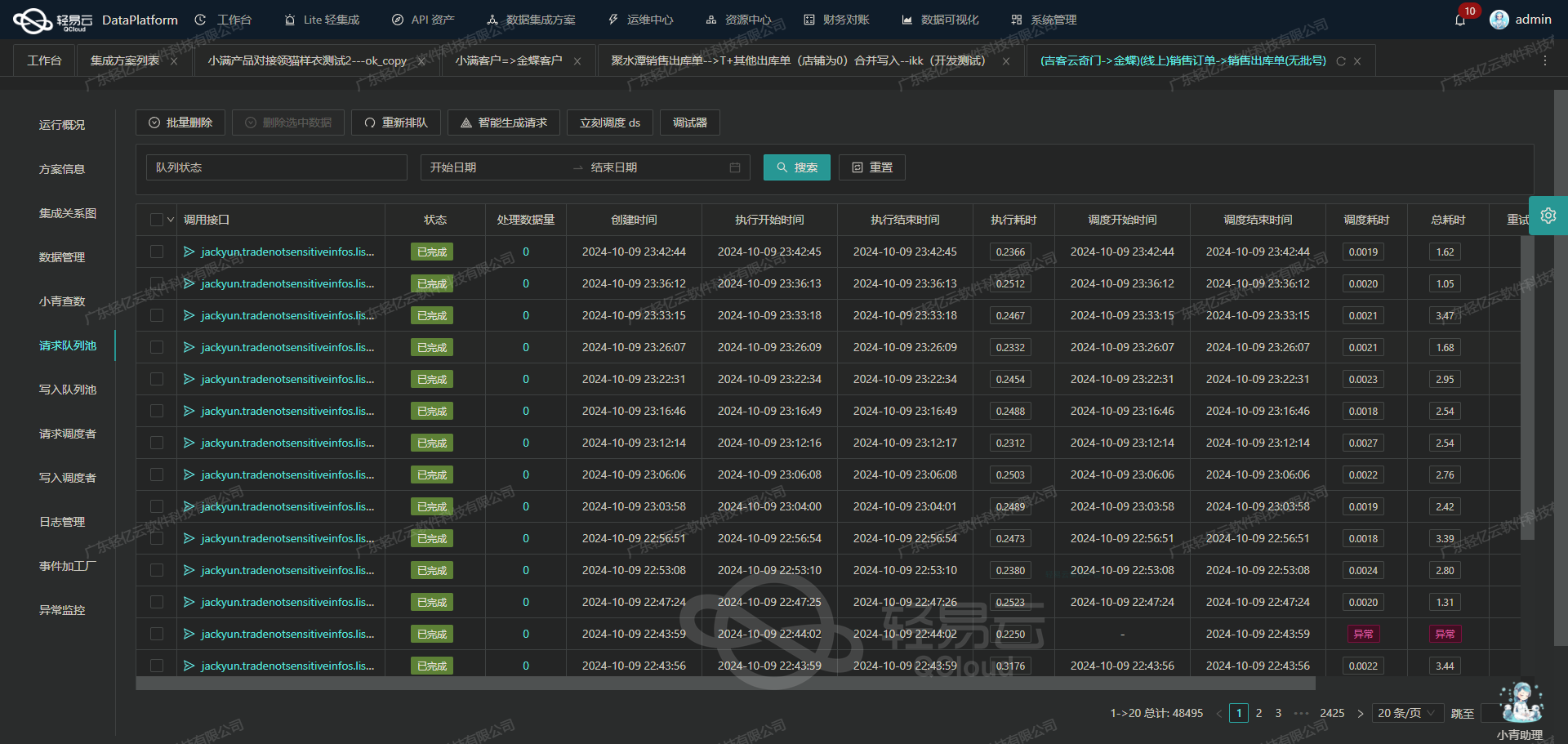

首先,通过调用hwc-get-listing API,从马帮获取实时更新的MSKU列表。这一步骤需要妥善处理接口限流和分页问题,确保大规模数据查询的稳定性和连续性。具体实现过程中,为避免遗漏数据,会设置有效的重试机制,并结合集中监控与告警功能进行实时跟踪。

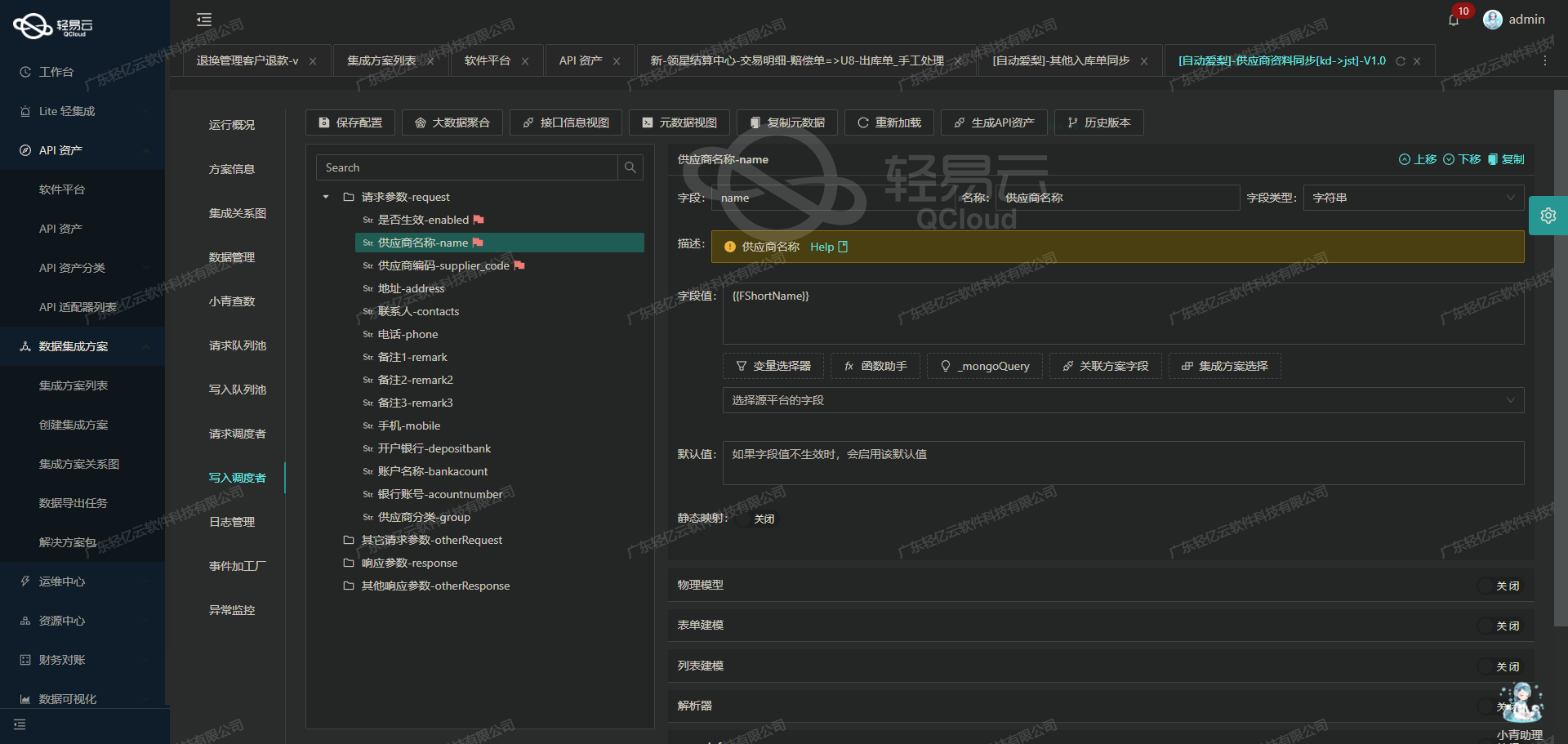

接下来,对获取的数据进行必要的数据质量监控和自定义转换逻辑,以解决由于业务需求或两者之间的数据结构差异带来的兼容性问题。例如,可以采用轻易云平台的可视化设计工具,将JSON格式转化为符合MySQL表结构要求的数据对象,并对字段值进行相应映射及清洗操作。

最后,通过batchexecute API,实现大量MSKU记录快速而可靠地同步到目标MySQL数据库中。在此环节,高吞吐量能力得到了充分应用,有助于大幅提升整体效率。此外,还需注意集成过程中潜在异常情况,如网络中断或数据库写入失败等,均须预置完善的错误检测与重试策略,保障任务最终顺利完成。

以上便是此次案例分析开篇所覆盖的重要技术要点。在后续内容中,我们会更进一步挖掘具体实施细则,以及编码示例与性能优化建议。

调用马帮接口hwc-get-listing获取并加工数据

在数据集成的生命周期中,调用源系统接口是至关重要的第一步。本文将深入探讨如何通过轻易云数据集成平台调用马帮接口hwc-get-listing,并对获取的数据进行加工处理。

接口配置与调用

首先,我们需要配置元数据以便正确调用马帮的hwc-get-listing接口。以下是该接口的元数据配置:

{

"api": "hwc-get-listing",

"effect": "QUERY",

"method": "POST",

"number": "platformSku",

"id": "id",

"name": "shipmentId",

"idCheck": true,

"request": [

{"field": "page", "label": "当前页数", "type": "string", "value": "1"},

{"field": "pageSize", "label": "分页返回数据", "type": "string", "value": "500"}

],

"autoFillResponse": true,

"beatFlat": ["shopList"]

}该配置文件定义了API的基本信息和请求参数。我们使用POST方法发送请求,并设置分页参数page和pageSize,确保每次请求返回500条记录。

数据请求与清洗

在实际操作中,我们通过轻易云平台发送POST请求到马帮的hwc-get-listing接口。以下是一个示例代码片段,用于发起请求并处理响应:

import requests

import json

url = 'https://api.mabang.com/hwc-get-listing'

headers = {'Content-Type': 'application/json'}

payload = {

'page': '1',

'pageSize': '500'

}

response = requests.post(url, headers=headers, data=json.dumps(payload))

data = response.json()

# 检查响应状态

if response.status_code == 200 and data['success']:

listings = data['data']['shopList']

else:

raise Exception("API调用失败或返回错误")在这个示例中,我们首先构建请求头和请求体,然后发送POST请求。如果响应成功且包含有效数据,我们将其存储在变量listings中。

数据转换与写入

接下来,我们需要对获取的数据进行清洗和转换,以便写入目标数据库(例如MySQL)。假设我们需要提取每个商品的SKU、ID和发货ID,并将其写入MySQL数据库:

import mysql.connector

# MySQL数据库连接配置

db_config = {

'user': 'your_username',

'password': 'your_password',

'host': 'your_host',

'database': 'your_database'

}

# 建立数据库连接

conn = mysql.connector.connect(**db_config)

cursor = conn.cursor()

# 清洗和转换数据

for item in listings:

platform_sku = item.get('platformSku')

id_ = item.get('id')

shipment_id = item.get('shipmentId')

# 插入数据到MySQL

cursor.execute("""

INSERT INTO your_table (platform_sku, id, shipment_id)

VALUES (%s, %s, %s)

ON DUPLICATE KEY UPDATE

platform_sku=VALUES(platform_sku),

shipment_id=VALUES(shipment_id)

""", (platform_sku, id_, shipment_id))

# 提交事务并关闭连接

conn.commit()

cursor.close()

conn.close()在这个代码片段中,我们首先建立与MySQL数据库的连接,然后遍历从API获取的数据列表,将所需字段提取出来并插入到数据库中。如果记录已经存在,则更新相应字段。

自动填充与扁平化处理

轻易云平台提供了自动填充响应和扁平化处理功能。在元数据配置中,设置autoFillResponse: true和beatFlat: ["shopList"]可以简化数据处理过程,使得响应中的嵌套结构被自动展开为平面结构。这极大地减少了手动解析复杂JSON结构的工作量,提高了开发效率。

综上所述,通过合理配置元数据并利用轻易云平台强大的功能,可以高效地实现从马帮系统获取、清洗、转换并写入目标数据库的全过程。这不仅提升了数据集成的透明度和效率,也为后续的数据分析和业务决策提供了坚实的数据基础。

使用轻易云数据集成平台进行ETL转换与写入MySQLAPI接口的技术案例

在数据集成生命周期的第二步中,我们将已经集成的源平台数据进行ETL转换,转为目标平台 MySQLAPI接口所能够接收的格式,最终写入目标平台。以下是详细的技术实现过程。

元数据配置解析

我们使用如下元数据配置来完成ETL转换和写入操作:

{

"api": "batchexecute",

"effect": "EXECUTE",

"method": "SQL",

"number": "id",

"id": "id",

"name": "id",

"idCheck": true,

"request": [

{"field": "id", "label": "id", "type": "string", "value": "{id}"},

{"field": "platformSku", "label": "platformSku", "type": "string", "value": "{platformSku}"},

{"field": "title", "label": "title", "type": "string", "value": "{title}"},

{"field": "asin", "label": "asin", "type": "string", "value": "{asin}"},

{"field": "amazonsite", "label": "amazonsite", "type": "string",

"value":"{amazonsite}"},

{"field":"FNSKU","label":"FNSKU","type":"string","value":"{FNSKU}"},

{"field":"pictureUrl","label":"pictureUrl","type":"string","value":"{pictureUrl}"},

{"field":"price","label":"price","type":"string","value":"{price}"},

{"field":"shopIds","label":"shopIds","type":"string","value":"{shopIds}"},

{"field":"quantity","label":"quantity","type":"string","value":"{quantity}"},

{"field":"variationParentAsin","label":"variationParentAsin","type":"string","value":"{variationParentAsin}"},

{"field":"stockNameCN","label":"stockNameCN","type":"string","value":"{stockNameCN}"},

{"field":"stockSku","label":"stockSku","type":"string","value":"{stockSku}"},

{"field":"reservedCustomerorders","label":"reservedCustomerorders","type":

"string","value":

"{reservedCustomerorders}"},{"field":

"reservedFcTransfers",

"label":

"reservedFcTransfers",

"type":

"string",

"value":

"{reservedFcTransfers}"},{"field":

"reservedFcProcessing",

"label":

"reservedFcProcessing",

"type":

"string",

"value":

"{reservedFcProcessing}"},{"field":

"afnInboundWorkingQuantity",

"label":

"afnInboundWorkingQuantity",

"type":

"string",

"value":

"{afnInboundWorkingQuantity}"},{"field":

"onWayQuantity",

"label":

"onWayQuantity",

"type":

"string",

"value":

"{onWayQuantity}"},{"field":

"unsellablequantity",

"label":

"unsellablequantity",

"type":

"string",

"value:

"{unsellablequantity}"},{"field:

"afnreservedquantity",

"label:

"afnreservedquantity,

"type:

"string,

"value:

"{afnreservedquantity}"},{"field:

"openDateTime,

""label:

""openDateTime,

""type:

""string,

""value:

""{openDateTime}

"},{"field:

""pStatus,

""label:

""pStatus,

""type:

""string,

""value:

""{pStatus}

"},{"field:

""localStatus,

""label:

""localStatus,

""type:

""string,

""value:

""{localStatus}

"},{"field:

"s

aleId,"l

abel:"saleId,"t

ype:"s

tring,"v

alue:"{

saleId}

"},{"f

ield:"sale_assistant_id,"l

abel:"sale_assistant_id,"t

ype:"s

tring,"v

alue:"{

sale_assistant_id}

"},{"f

ield:"developerId,"l

abel:"developerId,"t

ype:"s

tring,"v

alue:"{

developerId}

"},{"f

ield:"developer_assistant_id,"l

abel:"developer_assistant_id,"t

ype:"s

tring,"v

alue:"{

developer_assistant_id}

"},{"f

ield:"stockId,"l

abel:"stockId,"t

ype:"s

tring,"v

alue:"{

stockId}

"},{"f

ield:"stockType,"l

abel:"stockType,"t

ype:"

s tring,"

v alue:"

{ stockType}"

},{" field:"

isChange,"

l abel:"

isChange,"

t ype:"

s tring,"

v alue:"

{ isChange}"

},{" field:"

refreshDate,"

l abel:"

refreshDate,"

t ype:"

s tring,"

v alue:"

{ refreshDate}"

},{" field:"

nameCN,"

l abel:"

nameCN,"

t ype:"

s tring,"

v alue:"

{ nameCN}"

},{" field:"

nameEN,"

l abel:"

nameEN,"

t ype:"

s tring,"

v alue:"

{ nameEN}"

},{" field:"

saleName,"

l abel:"

saleName,"

t ype:"

s tring,"

v alue:"

{ saleName}"

},{" field:"

saleAssistantName,"

l abel:"

saleAssistantName,"

t ype:"

s tring,"

v alue:"

{ saleAssistantName}"

},{" field: "

developerName, "

l abel: "

developerName, "

t ype: "

s tring, "

v alue: "

{

developerName }

}, {

"

f ield: "

d eveloperAssistantName, "

"

l abel: "

d eveloperAssistantName, "

"

t ype: "

s tring, "

"

v alue: "

{

d eveloperAssistantName }

}, {

"

f ield: "

tradeName, "

"

l abel: "

tradeName, "

"

t ype: "

s tring, "

"

v alue: "

{

tradeName }

}, {

"

f ield: "

shopName, "

"

l abel: "

shopName, "

"

t ype: "

s tring, "

"

v alue: "

{

shopName }

}, {

"

f ield: "

sign,

"

"

"

"

"

"

"

"

"

"

"

"

"

"

"

"

"

"""

"""

"""

"""

"""

"""

"""

"""

"""

"""

"""

"""

"""

"""数据请求与清洗

在数据请求与清洗阶段,我们从源系统获取原始数据,并对其进行必要的预处理,以确保数据质量和一致性。例如,对于字段price,我们可能需要将其标准化为统一的货币格式;对于字段openDateTime,我们可能需要将其转换为标准的时间戳格式。

数据转换

在数据转换阶段,我们根据元数据配置,将源系统的数据映射到目标系统所需的数据结构。以下是一个简单的数据映射示例:

[

{

“id”: “12345”,

“platformSku”: “SKU12345”,

“title”: “Product Title”,

“asin”: “B000123456”,

“amazonsite”: “amazon.com”,

“FNSKU”: “X000123456”,

“pictureUrl”: “http://example.com/image.jpg”,

“price”: “19.99”,

...

},

...

]这些字段将被映射到目标MySQL数据库中的相应列。

数据写入

在数据写入阶段,我们使用SQL语句将转换后的数据插入到目标MySQL数据库中。根据元数据配置中的main_sql字段,我们可以构建如下SQL语句:

REPLACE INTO msku_listing_info (id, platformSku, title, asin, amazonsite, FNSKU, pictureUrl, price, shopIds, quantity, variationParentAsin, stockNameCN, stockSku, reservedCustomerorders, reservedFcTransfers, reservedFcProcessing, afnInboundWorkingQuantity,onWayQuantity , unsellablequantity , afnreservedquantity , openDateTime , pStatus , localStatus , saleId , sale_assistant_id , developerId , developer_assistant_id , stockId , stockType , isChange , refreshDate , nameCN , nameEN , saleName , saleAssistantName , developerName , developerAssistant Name , trade Name , shop Name ) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)我们通过参数化查询来避免SQL注入风险,并确保数据安全性。

API接口调用

最后,我们通过API接口调用,将构建好的SQL语句发送到MySQL数据库进行执行。以下是一个示例API请求:

POST /batchexecute HTTP/1.1

Host:api.example.com

Content-Type:application/json

Authorization:Bearer <token>

{

“main_sql”:”REPLACE INTO msku_listing_info (id,platformSku,title,asin,amazonsite,FNSKU,pictureUrl,price,shopIds,quantity,variationParentAsin,stock Name CN , stock Sku , reserved Customer orders , reserved Fc Transfers , reserved Fc Processing , afn Inbound Working Quantity , on Way Quantity , unsellable quantity , afn reserved quantity , open Date Time , p Status , local Status , sale Id , sale _ assistant _ id , developer Id , developer _ assistant _ id , stock Id , stock Type , is Change , refresh Date , name CN , name EN , sale Name , sale Assistant Name , developer Name , developer Assistant Name ) VALUES ”,

“request”:[

{“id”:”12345”,…},

{“id”:”67890”,…},

…

]

}

通过上述步骤,我们成功地将源平台的数据进行ETL转换,并写入到目标MySQL数据库中。